Google's Project Genie | Google's mind-bending AI tool for infinite, interactive worlds

Thursday, January 29, 2026

The world of AI is moving at an incredible pace. While we have grown accustomed to AI generating text, images, and even high-quality video, Google DeepMind has recently unveiled a breakthrough that takes generative technology into a brand-new dimension.

Oli Yeates

CEO & Founder

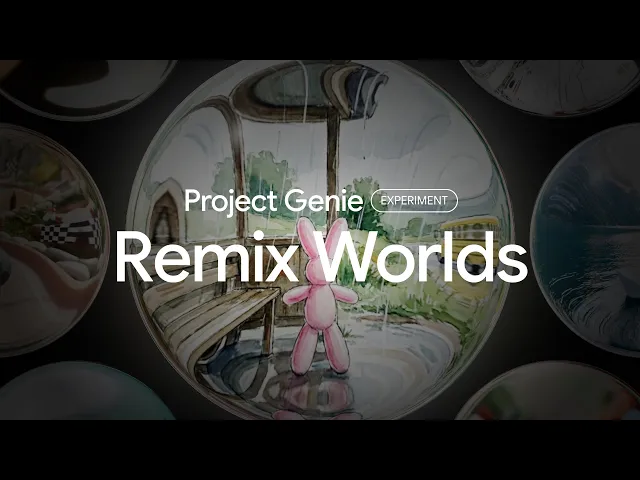

Known as Project Genie, this new foundation model represents a significant shift in how we think about digital creativity. It is not just another video generator; it is an AI that can create entire interactive, playable environments from a single prompt.

What is Project Genie?

At its core, Project Genie is a generative world model. It has been trained on hundreds of thousands of hours of video footage of 2D platforming games. What makes Genie remarkable is that this footage came without any labels or action data: the model was never told which buttons the player was pressing.
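How can a model learn controls without ever seeing action labels? The Genie research describes a latent action model that infers a small set of discrete "actions" from the change between consecutive frames. The sketch below is a toy stand-in for that idea, not DeepMind's code. It assumes the character's position is already known in every frame and that the codebook of actions is fixed by hand; a real model learns both from raw pixels. `CODEBOOK`, `infer_latent_action`, and `label_video` are hypothetical names.

```python
from typing import List, Tuple

Pos = Tuple[float, float]  # toy stand-in: the character's (x, y) in one frame

# Hypothetical, hand-fixed codebook of discrete "latent actions".
# A real latent action model would learn these prototypes from pixels instead.
CODEBOOK: List[Pos] = [
    (0.0, 0.0),   # 0: stay
    (1.0, 0.0),   # 1: move right
    (-1.0, 0.0),  # 2: move left
    (0.0, 1.0),   # 3: move up (jump)
]


def infer_latent_action(prev: Pos, nxt: Pos) -> int:
    """Map the change between two frames to the nearest codebook entry."""
    dx, dy = nxt[0] - prev[0], nxt[1] - prev[1]
    return min(
        range(len(CODEBOOK)),
        key=lambda i: (CODEBOOK[i][0] - dx) ** 2 + (CODEBOOK[i][1] - dy) ** 2,
    )


def label_video(track: List[Pos]) -> List[int]:
    """Assign a latent action to every consecutive pair of frames, with no human labels."""
    return [infer_latent_action(a, b) for a, b in zip(track, track[1:])]


print(label_video([(0, 0), (1, 0), (2, 0), (2, 1), (1.9, 1)]))  # [1, 1, 3, 0]
```

The point of the toy is that the "labels" are discovered from the footage itself: small, noisy movements (the last step above) snap to the nearest prototype, which is what lets a model turn unlabeled video into something controllable.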

In traditional game development, an engineer must program the physics, the controls, and the way a character interacts with their surroundings. Genie has learned these concepts simply by watching videos. It understands the "physics" of a platformer – knowing that if a character jumps, it must come back down, and if it hits a wall, it should stop.
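For contrast, here is a minimal sketch of the kind of hand-written rules a platformer engineer would normally code: gravity that brings a jumping character back down, and a wall that stops horizontal movement. This is conventional game logic, not anything taken from Genie; the constants `GRAVITY` and `JUMP_SPEED`, the `Player` type, and the `step` function are hypothetical.

```python
from dataclasses import dataclass

GRAVITY = -1.0     # hypothetical: change in vertical velocity per tick
JUMP_SPEED = 3.0   # hypothetical: upward velocity applied when jumping
GROUND_Y = 0.0


@dataclass
class Player:
    x: float = 0.0
    y: float = 0.0
    vy: float = 0.0


def step(p: Player, action: str, wall_x: float) -> Player:
    """Advance one tick of hand-written platformer physics."""
    vy = p.vy
    if action == "jump" and p.y <= GROUND_Y:
        vy = JUMP_SPEED                          # can only jump from the ground
    dx = {"left": -1.0, "right": 1.0}.get(action, 0.0)
    x = p.x + dx
    if x >= wall_x:                              # hitting the wall stops the move
        x = p.x
    vy += GRAVITY                                # gravity pulls the player down
    y = max(GROUND_Y, p.y + vy)                  # the ground stops the fall
    if y == GROUND_Y:
        vy = 0.0
    return Player(x, y, vy)


p = Player()
heights = []
for action in ["jump", "none", "none", "none", "none"]:
    p = step(p, action, wall_x=10.0)
    heights.append(p.y)
print(heights)  # [2.0, 3.0, 3.0, 2.0, 0.0]
```

Every rule here had to be written down explicitly; the claim about Genie is that equivalent behaviour emerges from watching footage rather than from code like this.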

According to the official announcement on the Google DeepMind Blog, the model can take a single image, a sketch, or a text description and transform it into a functional, side-scrolling world that a human can actually play.

The Shift from Static to Interactive

We have seen the power of models like Sora and Gemini in creating visual content, but interactivity has always been the "final boss" for generative AI.

Most AI video models produce a linear sequence of frames. You can watch the video, but you cannot change what happens within it. Project Genie changes this by acting as a "foundation world model." Because it understands the underlying mechanics of the environment, it allows users to interact with the generated content in real time.
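The difference can be sketched as two generation loops. In a linear video model each new frame depends only on the frames before it; in an interactive world model each new frame also depends on the player's input, so different inputs produce different worlds. The functions `generate_video` and `play_world` and the toy models `drift` and `toy_dynamics` are hypothetical stand-ins for learned networks, and a "frame" is reduced to a character position for clarity; this does not describe Genie's actual interface.

```python
from typing import Callable, Dict, List, Tuple

Frame = Tuple[int, int]  # toy stand-in for a generated frame: the character's (x, y)


def generate_video(first: Frame, n: int,
                   predict: Callable[[Frame], Frame]) -> List[Frame]:
    """Linear generation: each frame depends only on the previous frame."""
    frames = [first]
    for _ in range(n):
        frames.append(predict(frames[-1]))
    return frames


def play_world(first: Frame, actions: List[str],
               dynamics: Callable[[Frame, str], Frame]) -> List[Frame]:
    """Interactive generation: each frame also depends on the player's action."""
    frames = [first]
    for action in actions:
        frames.append(dynamics(frames[-1], action))
    return frames


# Hypothetical toy models standing in for learned networks.
MOVES: Dict[str, int] = {"left": -1, "right": 1, "none": 0}


def drift(f: Frame) -> Frame:
    return (f[0] + 1, f[1])  # the "video" always scrolls right, whatever you do


def toy_dynamics(f: Frame, action: str) -> Frame:
    return (f[0] + MOVES[action], f[1])  # the player decides where to go


print(generate_video((0, 0), 3, drift)[-1])                              # (3, 0)
print(play_world((0, 0), ["right", "right", "left"], toy_dynamics)[-1])  # (1, 0)
print(play_world((0, 0), ["left", "left", "left"], toy_dynamics)[-1])    # (-3, 0)
```

The only structural change is the extra `action` argument to the step function, but it is what turns a clip you watch into a world you can steer.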

Why Does This Matter?

The implications for the creative industries are vast. Historically, building a game world took a team of artists and developers months of work. With models like Genie, the barrier to entry is lowered significantly. A designer could sketch a level on a napkin, take a photo, and have a playable prototype in seconds.

Beyond gaming, this technology serves as an important stepping stone toward artificial general intelligence (AGI). By teaching models to understand the cause and effect of a 2D world, researchers are gaining insights into how AI might eventually understand the complexities of our 3D physical world.

A New Era for Creators

Project Genie is currently a research preview, but it signals a future where "creation" and "play" are no longer separate activities. We are entering an era where anyone with an idea can become a world-builder.

For those interested in the technical depth of this project, you can read the full research paper and view demonstrations on the Google DeepMind Project Page.

As AI continues to evolve, the line between watching a story and participating in one will only continue to blur. It is a fascinating time for the digital landscape, and we look forward to seeing how these world models are integrated into our daily creative tools.